Synthetic Users, Real Decisions: How to Use AI Test Panels to Pre‑Validate UX and Campaigns

The Future of UX and Campaign Research: Synthetic Users Meet Rapid Validation

Organizations face relentless pressure to ship user-centric features and winning campaigns faster than ever, yet traditional research workflows can slow innovation and strain timelines and budgets. In 2025, one approach is moving into the mainstream: using AI-generated synthetic users to pre-validate UX, copy, and marketing concepts. With AI-powered test panels, made up of virtual personas that simulate feedback at scale, teams can screen ideas within hours and then focus real-user validation where it counts.

What Are Synthetic Users, and Why Now?

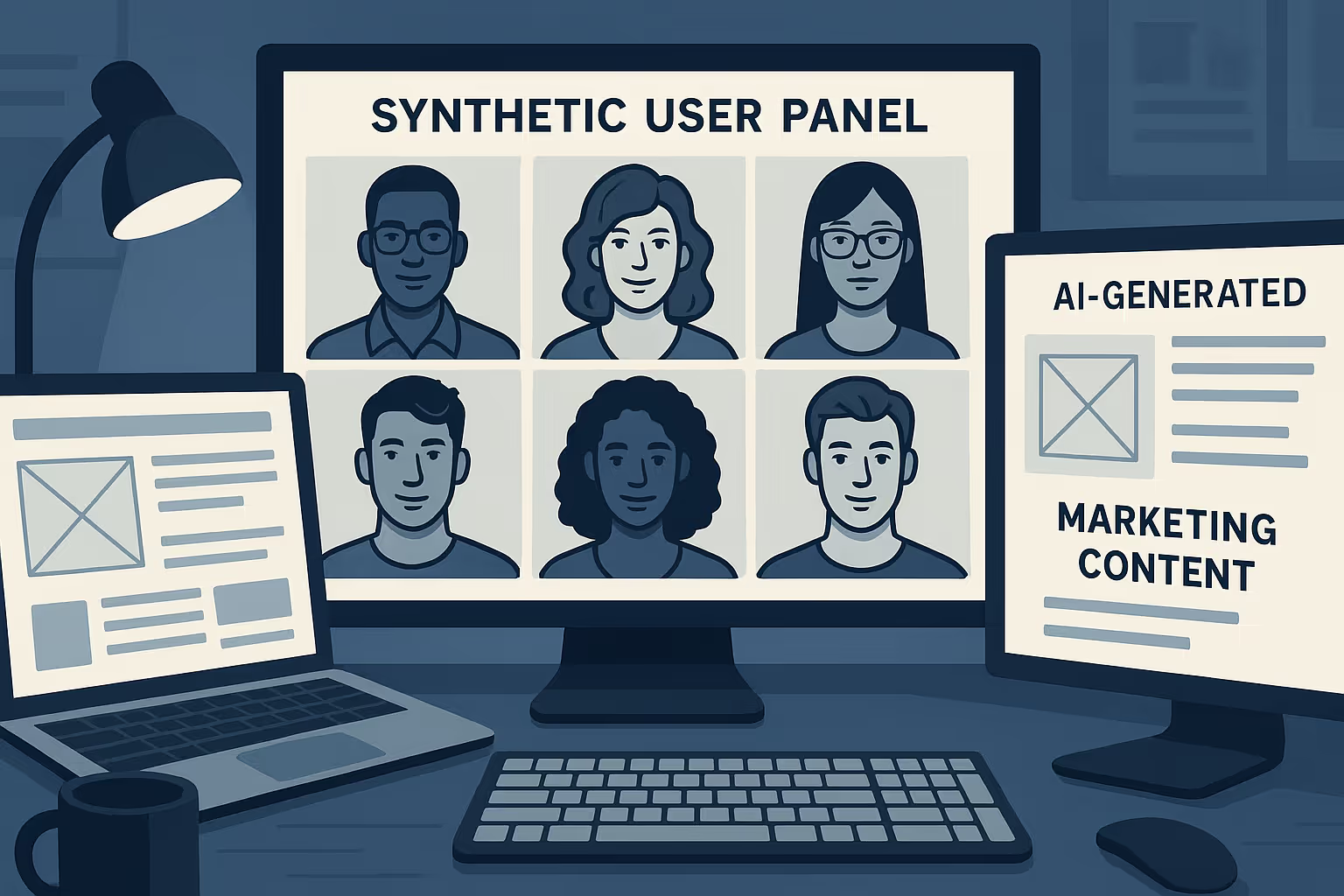

Synthetic users are AI-generated virtual respondents designed to mimic the attitudes, knowledge, and behaviors of target user segments. Unlike the bots used for automated system testing, they are built to give plausible, nuanced feedback on UX flows, information architecture, pricing tests, and messaging.

Why does this matter in 2025?

- Current AI models and training data can generate plausible, lifelike personas that span many demographic segments.

- Platforms like Statsig, Synthetic Users, MOSTLY AI, Native AI, and Fairgen make it easy to deploy synthetic panels in hours, not weeks.

- Teams that adopt synthetic panels report measurable gains: faster iteration, sharper project triage, and better go-to-market outcomes.

Use Case: Squeezing Research Timelines, Not Research Quality

The main driver for synthetic-user research is speed: first-pass tests run in hours rather than days, which lets teams weed out flawed concepts early, reserve human validation for the ideas that merit it, and demonstrate quantifiable ROI.

Step-by-Step: Deploying AI Test Panels

- Define personas and guardrails, grounded in real customer data.

- Generate synthetic panels with an established tool, running privacy and bias checks.

- Design and run tests (e.g., preference polls, click-throughs, copy feedback).

- Analyze results with AI-assisted rubrics: segment by persona and summarize themes.

- Decide which concepts merit real-user validation.

- Calibrate by comparing synthetic and real-user results on the same tests, so bias and drift are caught early.
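The steps above can be sketched end to end in Python. This is a minimal illustration under stated assumptions: `Persona`, `simulate_rating`, `composition_gap`, and the other names are hypothetical, and `simulate_rating` is a toy scoring rule standing in for whatever synthetic-user platform or LLM call you actually use. It shows a basic panel-composition bias check, ratings collected per persona segment, segment-level means, and triage of the strongest variant for real-user validation.

```python
import random
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Persona:
    """A synthetic respondent profile. Fields are illustrative assumptions."""
    name: str
    segment: str
    price_sensitivity: float  # 0.0 = indifferent to price, 1.0 = very sensitive


def composition_gap(personas, target_shares):
    """Largest absolute gap between panel segment shares and target shares (a basic bias check)."""
    counts = Counter(p.segment for p in personas)
    segments = set(counts) | set(target_shares)
    return max(abs(counts[s] / len(personas) - target_shares.get(s, 0.0)) for s in segments)


def simulate_rating(persona, variant, rng, noise=0.3):
    """Hypothetical stand-in for a real synthetic-user platform or LLM call.

    Toy rule: price-sensitive personas rate 'free' offers higher; ratings are clamped to 1-5.
    """
    lift = 1.5 * persona.price_sensitivity if "free" in variant.lower() else 0.5
    return max(1.0, min(5.0, 3.0 + lift + rng.gauss(0.0, noise)))


def run_panel(personas, variants, seed=0, noise=0.3):
    """Collect 1-5 ratings keyed by (variant, segment)."""
    rng = random.Random(seed)
    scores = defaultdict(list)
    for persona in personas:
        for variant in variants:
            scores[(variant, persona.segment)].append(
                simulate_rating(persona, variant, rng, noise)
            )
    return scores


def segment_means(scores):
    """Mean rating per (variant, segment), for persona-level analysis."""
    return {key: mean(vals) for key, vals in scores.items()}


def triage(scores, top_k=1):
    """Rank variants by overall mean rating; return the top_k to send to real users."""
    by_variant = defaultdict(list)
    for (variant, _segment), ratings in scores.items():
        by_variant[variant].extend(ratings)
    ranked = sorted(by_variant, key=lambda v: mean(by_variant[v]), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    personas = [Persona("p1", "students", 0.9), Persona("p2", "smb_owners", 0.4)]
    variants = ["Start your free trial", "Book a demo"]
    print("Composition gap:", composition_gap(personas, {"students": 0.5, "smb_owners": 0.5}))
    scores = run_panel(personas, variants)
    print(segment_means(scores))
    print("Send to real users:", triage(scores))
```

Keeping the simulation behind a single function means the toy rule can be swapped for a real panel call without touching the bias check, analysis, or triage logic.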

Best Use Cases and Limitations:

- Ideal: early idea screening, optimizing copy or variants, scaling qualitative feedback, initial pricing exploration.

- Not suitable: accessibility nuance, emotional or group dynamics, finding edge cases.

Metrics:

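- Prediction Accuracy: how often synthetic results agree with real-user results on the same test

- Time-to-Insight: elapsed time from launching a test to a usable readout

- Cost per Learning: research spend per decision informed (typically much lower for synthetic panels)

- Win Rate Uplift: improvement in A/B test win rates for variants screened with synthetic panels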
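The four metrics can be computed from a simple log of tests that ran on both a synthetic panel and real users. The sketch below assumes a hypothetical `CalibratedTest` record; prediction accuracy here is plain winner agreement, and cost per learning treats each test as one learning. Both are simplifying assumptions, and teams may prefer rank correlation or a finer-grained count of decisions.

```python
from dataclasses import dataclass


@dataclass
class CalibratedTest:
    """One concept test run on both a synthetic panel and real users (hypothetical schema)."""
    synthetic_winner: str
    real_winner: str
    hours_to_insight: float  # time from launch to a usable synthetic readout
    cost: float              # spend on the synthetic run, in your own currency unit


def _require(tests):
    if not tests:
        raise ValueError("need at least one calibrated test")


def prediction_accuracy(tests):
    """Share of tests where the synthetic panel picked the same winner as real users."""
    _require(tests)
    return sum(t.synthetic_winner == t.real_winner for t in tests) / len(tests)


def mean_time_to_insight(tests):
    """Average hours from launch to readout."""
    _require(tests)
    return sum(t.hours_to_insight for t in tests) / len(tests)


def cost_per_learning(tests):
    """Total spend divided by number of tests, treating each test as one learning."""
    _require(tests)
    return sum(t.cost for t in tests) / len(tests)


def win_rate_uplift(screened_wins, screened_tests, baseline_wins, baseline_tests):
    """A/B win rate of synthetically screened variants minus the unscreened baseline."""
    return screened_wins / screened_tests - baseline_wins / baseline_tests


if __name__ == "__main__":
    log = [
        CalibratedTest("A", "A", 2.0, 50.0),
        CalibratedTest("B", "A", 3.0, 50.0),
        CalibratedTest("C", "C", 1.0, 50.0),
        CalibratedTest("A", "A", 2.0, 50.0),
    ]
    print(prediction_accuracy(log), mean_time_to_insight(log), cost_per_learning(log))
    print(win_rate_uplift(6, 10, 4, 10))
```

Tracking prediction accuracy over time is what makes the calibration step concrete: if it drops, the synthetic panel needs new source data or prompts before its results are trusted for triage.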

Key Pitfalls:

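- Mode Collapse: responses converge on averaged, repetitive answers. Refresh source data and prompts regularly.

- Overconfidence: treat synthetic results as hypotheses and always confirm them with real users.

- Ethics & Bias: personas can inherit bias from the data behind them, so use platforms with bias-mitigation tools.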
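Mode collapse can be flagged automatically before anyone reads the results. The sketch below uses two simple diversity measures: the distinct-n ratio (unique n-grams divided by total n-grams across responses) and the mean pairwise Jaccard overlap of word sets. Whitespace tokenization and the `min_distinct` / `max_jaccard` thresholds are hypothetical choices that should be tuned against your own calibration data.

```python
from itertools import combinations


def _tokens(text):
    """Naive lowercase whitespace tokenizer (an assumption; swap in a real one if needed)."""
    return text.lower().split()


def distinct_n(responses, n=2):
    """Unique n-grams / total n-grams across all responses (higher = more diverse)."""
    total = 0
    unique = set()
    for response in responses:
        toks = _tokens(response)
        grams = [tuple(toks[i:i + n]) for i in range(len(toks) - n + 1)]
        total += len(grams)
        unique.update(grams)
    return len(unique) / total if total else 0.0


def mean_pairwise_jaccard(responses):
    """Average word-set overlap across every pair of responses (higher = more repetitive)."""
    sets = [set(_tokens(r)) for r in responses]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 0.0
    return sum(len(a & b) / len(a | b) if a | b else 1.0 for a, b in pairs) / len(pairs)


def looks_collapsed(responses, min_distinct=0.5, max_jaccard=0.6):
    """Flag a panel whose answers are too uniform. Thresholds are hypothetical."""
    return distinct_n(responses) < min_distinct or mean_pairwise_jaccard(responses) > max_jaccard


if __name__ == "__main__":
    collapsed = ["The signup flow is clear and simple"] * 4
    diverse = [
        "I could not find the pricing link",
        "Too many form fields before checkout",
        "Loved the progress bar but the font is small",
        "Why ask for my phone number here",
    ]
    print(looks_collapsed(collapsed), looks_collapsed(diverse))
```

Both measures are cheap lexical proxies; they catch verbatim repetition well but can miss answers that are worded differently yet say the same thing.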

Hybrid Model: Combine synthetic panels with small-n real-user testing and product telemetry for the most robust results.
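One way to combine a synthetic panel with a small real-user test is a Beta-Binomial update: encode the synthetic preference share as a deliberately weak Beta prior, then update it with real-user counts. This is one possible technique, not a method prescribed by any of the platforms named here. The `effective_n` discount is a hypothetical parameter expressing how many real respondents you judge the synthetic result to be worth, and should come from calibration history rather than panel size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    """Beta(alpha, beta) belief about the share of users who prefer a variant."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


def prior_from_synthetic(synthetic_share: float, effective_n: float) -> Beta:
    """Turn a synthetic-panel preference share into a weak prior.

    Starts from a uniform Beta(1, 1) so the prior stays proper at 0% or 100%,
    then adds effective_n pseudo-respondents split by the synthetic share.
    """
    if not 0.0 <= synthetic_share <= 1.0:
        raise ValueError("synthetic_share must be between 0 and 1")
    if effective_n < 0:
        raise ValueError("effective_n must be non-negative")
    return Beta(1 + synthetic_share * effective_n, 1 + (1 - synthetic_share) * effective_n)


def update_with_real_users(prior: Beta, preferred: int, total: int) -> Beta:
    """Conjugate Beta-Binomial update with small-n real-user counts."""
    if not 0 <= preferred <= total:
        raise ValueError("preferred must be between 0 and total")
    return Beta(prior.alpha + preferred, prior.beta + (total - preferred))


if __name__ == "__main__":
    prior = prior_from_synthetic(0.8, effective_n=10)       # about Beta(9, 3)
    posterior = update_with_real_users(prior, preferred=3, total=8)
    print(round(prior.mean, 3), round(posterior.mean, 3))
```

With a synthetic share of 80% and only 3 of 8 real users preferring the variant, the posterior mean lands between the two signals, closer to the real-user result than the synthetic one; a smaller `effective_n` pulls it further toward the real users.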

Prompt Templates:

- Create synthetic personas representing your target demographic.

- Simulate accessibility-focused feedback on signup buttons (as a first pass only; confirm with users of assistive technology).

- Simulate a first-time user navigating a payment flow.
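The three prompt templates can be kept as parameterized strings so every panel run uses identical wording. The sketch below uses Python's standard `string.Template`; the template text and the `$count`, `$audience`, `$notes`, `$persona`, `$button_spec`, and `$steps` placeholders are hypothetical examples, not fields of any particular platform.

```python
from string import Template

# Hypothetical templates mirroring the three prompts above.
PROMPT_TEMPLATES = {
    "create_personas": Template(
        "Create $count synthetic personas representing $audience. For each, list "
        "age range, goals, frustrations, and comfort with technology. Ground every "
        "persona in these research notes and do not invent statistics: $notes"
    ),
    "accessibility_feedback": Template(
        "You are $persona. Review this signup button: $button_spec. Describe any "
        "difficulty you would have perceiving, reaching, or activating it, and why."
    ),
    "payment_flow": Template(
        "You are $persona, using this product for the first time. Walk through the "
        "payment flow step by step ($steps). At each step, say what you expect, "
        "what confuses you, and whether you would continue."
    ),
}


def build_prompt(name, **fields):
    """Fill a named template; Template.substitute raises KeyError if a placeholder is missing."""
    return PROMPT_TEMPLATES[name].substitute(**fields)


if __name__ == "__main__":
    print(build_prompt(
        "payment_flow",
        persona="a budget-conscious student on a phone",
        steps="card details, billing address, confirmation",
    ))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice: a missing field fails loudly instead of sending a prompt with a literal placeholder to the panel.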

Making the Most of Synthetic User Panels:

- View as accelerators, not replacements.

- Calibrate with humans and analytics.

- Use robust metrics.

- Stay updated on tools and ethics.

